Module 4: Deploy Microservices

Approach.

1. Switch the Traffic: This is the starting configuration, with the monolithic Node.js app running in a container on Amazon ECS.

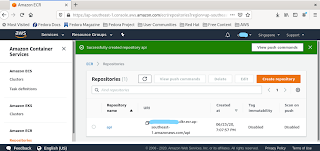

2. Start Microservices: Using the three container images you built and pushed to Amazon ECR in the previous module, you will start up three microservices on your existing Amazon ECS cluster.

3. Configure the Target Groups: As in Module 2, you will add a target group for each service and update the ALB rules to connect the new microservices.

4. Shut Down the Monolith: By changing one rule in the ALB, you will start routing traffic to the running microservices. After the reroute has been verified, you will shut down the monolith.
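The traffic switch in steps 3 and 4 depends on how an ALB listener chooses a rule: rules are evaluated in priority order, lowest number first, the first matching rule wins, and the default action applies when nothing matches. The minimal Python model below illustrates that behaviour. The service names (`users`, `threads`, `posts`), paths, priorities and the `drop-traffic` default target are hypothetical. Modelling the "one rule" change as moving the monolith's catch-all rule to the lowest precedence is an assumption, only one way such a switch could be set up.

```python
from fnmatch import fnmatchcase

# (priority, path pattern, target group). Lower priority numbers are evaluated first.
Rule = tuple[int, str, str]


def route(path: str, rules: list[Rule], default_target: str) -> str:
    """Return the target group an ALB-style listener would forward `path` to."""
    # Evaluate rules in ascending priority order; the first match wins.
    for _priority, pattern, target in sorted(rules):
        # fnmatchcase only approximates ALB path patterns (case-sensitive, '*' and '?').
        if fnmatchcase(path, pattern):
            return target
    # No rule matched, so the listener's default action applies.
    return default_target


# Hypothetical service rules, for illustration only.
service_rules = [
    (10, "/api/users*", "users"),
    (20, "/api/threads*", "threads"),
    (30, "/api/posts*", "posts"),
]

# Before the switch: the monolith's catch-all rule is evaluated first,
# so it shadows the new service rules.
before = [(1, "/api*", "monolith")] + service_rules
# After the switch: the same rule now has the lowest precedence.
after = [(100, "/api*", "monolith")] + service_rules

for path in ["/api/users/1", "/api/posts", "/api/other"]:
    print(path, route(path, before, "drop-traffic"), route(path, after, "drop-traffic"))
```

In this model only the catch-all rule changes, yet `/api/users/1` moves from the monolith to `users`, while unmatched paths such as `/api/other` still reach the monolith until it is shut down.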

Steps.

Creation of task definitions for each service. The "Configure via JSON" feature of the ECS console was used to create the three task definitions.
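The JSON pasted into "Configure via JSON" follows the ECS task definition schema. The Python sketch below generates one such definition per service. The account ID, region, image tag, service names (`users`, `threads`, `posts`), CPU/memory values and container port 3000 are placeholders or assumptions, not values from the original setup. `hostPort: 0` requests dynamic host port mapping, which lets several tasks of a service share one container instance behind the ALB.

```python
import json

# Hypothetical placeholders: replace with your own account ID, region and image tag.
ACCOUNT_ID = "123456789012"
REGION = "us-west-2"
SERVICES = ["users", "threads", "posts"]  # assumed service names


def task_definition(service: str, tag: str = "latest", container_port: int = 3000) -> dict:
    """Build a task definition for one microservice (EC2 launch type, bridge networking)."""
    image = f"{ACCOUNT_ID}.dkr.ecr.{REGION}.amazonaws.com/{service}:{tag}"
    return {
        "family": service,
        "networkMode": "bridge",
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [
            {
                "name": service,
                "image": image,
                "essential": True,
                "cpu": 256,
                "memoryReservation": 256,
                # hostPort 0 asks ECS to pick a free host port for each task.
                "portMappings": [
                    {"containerPort": container_port, "hostPort": 0, "protocol": "tcp"}
                ],
            }
        ],
    }


if __name__ == "__main__":
    for name in SERVICES:
        # Paste the printed JSON into the console editor.
        print(json.dumps(task_definition(name), indent=2))
```

The same dict can also be passed as keyword arguments to boto3's `ecs.register_task_definition(**task_definition(name))` if you prefer scripting over the console.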

Creation of target groups. This time, the AWS CLI was used to create the three corresponding target groups.
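Since the target groups were created with the AWS CLI, a small Python helper can generate the three `aws elbv2 create-target-group` commands. The VPC ID, port, service names and health-check path below are assumptions to replace with your own values. With dynamic host ports, ECS registers each task's actual host port, so the target group's `--port` only acts as a default for targets registered without one.

```python
import shlex

# Hypothetical placeholder VPC ID: use the VPC of your existing ECS cluster and ALB.
VPC_ID = "vpc-0123456789abcdef0"
SERVICES = ["users", "threads", "posts"]  # assumed service names


def create_target_group_cmd(service: str, vpc_id: str = VPC_ID, port: int = 80) -> list[str]:
    """Return the `aws elbv2 create-target-group` argv for one service."""
    return [
        "aws", "elbv2", "create-target-group",
        "--name", service,
        "--protocol", "HTTP",
        "--port", str(port),
        "--vpc-id", vpc_id,
        "--target-type", "instance",
        # Assumed health-check path: point it at a route each service answers with 200.
        "--health-check-path", f"/api/{service}",
    ]


for name in SERVICES:
    # Print a copy-pasteable command; subprocess.run(cmd, check=True) would execute it.
    print(shlex.join(create_target_group_cmd(name)))
```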

Configuring the listener rules
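Each listener rule pairs a path condition with a forward action to one target group. The sketch below builds keyword arguments for boto3's `elbv2.create_rule`; the `/api/<service>*` path scheme, the priorities and the ARNs passed in are assumptions, not values from the original configuration.

```python
def path_rule(listener_arn: str, target_group_arn: str, path_pattern: str, priority: int) -> dict:
    """Keyword arguments for elbv2.create_rule: forward `path_pattern` to one target group."""
    return {
        "ListenerArn": listener_arn,
        "Priority": priority,
        "Conditions": [
            {"Field": "path-pattern", "PathPatternConfig": {"Values": [path_pattern]}}
        ],
        "Actions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


def service_rules(listener_arn: str, target_groups: dict[str, str],
                  first_priority: int = 10, step: int = 10) -> list[dict]:
    """One rule per service, e.g. /api/users* -> users target group (assumed paths)."""
    return [
        path_rule(listener_arn, arn, f"/api/{name}*", first_priority + i * step)
        for i, (name, arn) in enumerate(target_groups.items())
    ]


# Usage sketch (requires boto3 and AWS credentials; ARNs are placeholders):
#   elbv2 = boto3.client("elbv2")
#   for kwargs in service_rules(listener_arn, {"users": users_tg_arn, "posts": posts_tg_arn}):
#       elbv2.create_rule(**kwargs)
```

Spacing priorities by 10 is only a convenience that leaves room to insert rules later; ALB requires each priority on a listener to be unique.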

Deploying the microservices
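Each microservice runs as an ECS service attached to its target group, so ECS registers and deregisters tasks with the ALB automatically. Below is a sketch of the keyword arguments for boto3's `ecs.create_service`. The cluster name, service names, container port 3000, EC2 launch type and a default of one task are assumptions; the container name must match the one in the service's task definition.

```python
def service_params(cluster: str, service: str, target_group_arn: str,
                   desired_count: int = 1, container_port: int = 3000) -> dict:
    """Keyword arguments for ecs.create_service for one microservice behind the ALB."""
    return {
        "cluster": cluster,
        "serviceName": service,
        # Family name only: ECS then uses the latest ACTIVE revision.
        "taskDefinition": service,
        "desiredCount": desired_count,
        "launchType": "EC2",
        "loadBalancers": [
            {
                "targetGroupArn": target_group_arn,
                "containerName": service,  # assumed to equal the service name
                "containerPort": container_port,
            }
        ],
    }


# Usage sketch (requires boto3 and AWS credentials; names and ARNs are placeholders):
#   ecs = boto3.client("ecs")
#   for name, tg_arn in {"users": users_tg_arn, "posts": posts_tg_arn}.items():
#       ecs.create_service(**service_params("my-cluster", name, tg_arn))
```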

Traffic switching
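The switch-over can be scripted as two calls, sketched below with boto3-style keyword arguments: `elbv2.modify_rule` to change the single rule's forward action, then `ecs.update_service` with `desiredCount=0` to stop the monolith's tasks once the reroute is confirmed. Which rule is changed, and which target group it should point to, depends on how the listener was configured in Module 2, so every ARN and name here is a placeholder. The `verified` callback is a hypothetical hook for whatever check you use.

```python
from typing import Any, Callable


def repoint_rule(rule_arn: str, target_group_arn: str) -> dict:
    """Keyword arguments for elbv2.modify_rule that change only the forward target."""
    # Conditions are omitted, so the rule keeps its existing ones.
    return {
        "RuleArn": rule_arn,
        "Actions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


def scale_to_zero(cluster: str, service: str) -> dict:
    """Keyword arguments for ecs.update_service that stop every task without deleting the service."""
    return {"cluster": cluster, "service": service, "desiredCount": 0}


def switch_traffic(elbv2: Any, ecs: Any, rule_arn: str, target_group_arn: str,
                   cluster: str, monolith_service: str,
                   verified: Callable[[], bool]) -> bool:
    """Change one listener rule, then shut the monolith down only if `verified()` passes."""
    elbv2.modify_rule(**repoint_rule(rule_arn, target_group_arn))
    if not verified():
        # Leave the monolith running so the rule change can be rolled back.
        return False
    ecs.update_service(**scale_to_zero(cluster, monolith_service))
    return True
```

Scaling to zero rather than deleting the service keeps the monolith's definition around, so it can be scaled back up quickly if the microservices misbehave.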

Validating that the services are working
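Validation can be approached from two angles: the ALB's view of target health, and a plain HTTP request through the load balancer. The sketch below parses a response shaped like boto3's `elbv2.describe_target_health` output and performs a simple GET with the standard library. The URLs and target groups you check are up to you; nothing here is taken from the original write-up.

```python
from urllib.request import urlopen


def unhealthy_targets(response: dict) -> list[str]:
    """Return "id:port" for every target whose state is not 'healthy'.

    `response` is the dict returned by elbv2.describe_target_health(TargetGroupArn=...).
    """
    return [
        f"{desc['Target']['Id']}:{desc['Target'].get('Port')}"
        for desc in response["TargetHealthDescriptions"]
        if desc["TargetHealth"]["State"] != "healthy"
    ]


def endpoint_ok(url: str, timeout: float = 5.0) -> bool:
    """True if a GET to `url` (e.g. via the ALB's DNS name) returns HTTP 200."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError (4xx/5xx) and socket timeouts all derive from OSError.
        return False
```

States such as `initial`, `draining` or `unused` are reported as not healthy here, which is usually what you want right after a deployment: wait and re-check until the list is empty.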